Contents

- Introduction

- Data

- Analysis

- Methodology

- Internals of TrackMania input handling

- Ensuring correctness of presented footage

- Comparing different analog devices

- Footage analysis

- Other replays from Nations leaderboards

- Other considerations

- Results

I Introduction

1. Mechanics

Trackmania United Forever is a racing game in which the goal is to complete any track as fast as possible. Players compete against each other for the fastest times on tracks, and in order to keep records centralized, the community developed a website called Trackmania Exchange. This website maintains a “Top 10” leaderboard for all official tracks released by Nadeo and for hundreds of thousands of user submitted tracks, and is the main leaderboard Trackmania players compete on.

In order to compete on Trackmania Exchange leaderboards, players have to submit a Replay file of their record. The game automatically generates a Replay file upon completing a track, which can then be easily shared and uploaded to Trackmania Exchange. No live video evidence is required when submitting a record.

The record system has been this way since the inception of the website in 2003. But in recent years, with the rise of tool-assisted speedruns, there’s been a growing concern among players that it could be possible to cheat in these conditions. Since players submit Replay files, and not live recordings, how can you tell whether a record was played by human hands or an input script?

Because of this concern, we developed several advanced Replay analyzing tools. These tools allow us to get a better understanding of how Trackmania records were achieved, and whether they were played legitimately or not.

In this document we will present our findings after investigating all official world records in Trackmania Nations Forever and Trackmania United Forever.

2. Motivation

Trackmania’s physics engine is completely deterministic. This means that the exact same inputs will always yield the exact same results (e.g. Press Forward tracks). In 2019, donadigo re-discovered that Trackmania’s Replay files have all inputs stored in them and that they can be replayed again and analysed in-game. The fact that inputs have been stored in the files was known beforehand, but never made use of in real applications.

We later developed a tool that visualized inputs from Replay files onto a display. This display shows which direction and how much a player is steering, and when they are accelerating or braking.

The input display first worked as a great learning resource, as it allowed for high detail analysis of the controller movement in any given record. And by studying the inputs of the world record, players could get a better understanding of what they needed to do to improve on a track.

With the increasing amount of records being analysed, we eventually noticed certain players’ inputs deviating from the “norm”. This ultimately led to a deeper investigation of what was actually happening in these replays.

In this investigation we will focus on detecting & analyzing replays that were done with slow-motion techniques. Intuitively, a replay done at slower game speeds will feature more frequent controller steering and unnatural spikes when approaching key sections of a track. Such techniques can be achieved using software like Cheat Engine to globally slow down the time of the game, and using such software in offline play does not reveal this fact to anyone that inspects the replay file.
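The intuition above can be put into numbers with a small sketch. This is our own simplification, not a measurement from the dataset: it assumes the player's hand moves at the same rate in real time regardless of game speed, and the function name and sample values are hypothetical.

```python
def apparent_rate(real_rate_per_second: float, game_speed_percent: int) -> float:
    """Direction changes per in-game second, as seen when the replay
    is played back at 100% speed.

    Assumes the player's real-time hand speed is unaffected by the slowdown.
    """
    if not 0 < game_speed_percent <= 100:
        raise ValueError("game speed must be in (0, 100] percent")
    # One in-game second lasts 100 / game_speed_percent real seconds,
    # so that many times more real-time actions fit into it.
    return real_rate_per_second * 100 / game_speed_percent


# Hypothetical example: 6 direction changes per real second at 40% game speed.
print(apparent_rate(6, 40))  # 15.0
```

Under this assumption, a steering rate that is unremarkable in real time becomes a much higher rate once the replay is viewed at normal speed.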

3. Objectivity

It should be noted that the authors of this document seek to preserve the integrity of the Trackmania Exchange leaderboards, and are solely motivated by the presence of exceptional empirical data. Any player, regardless of popularity, following, or skill, who is observed having deviating inputs is held to the same level of scrutiny. The goal of this document is to present an unbiased analysis of the data. We realize that we cannot analyse all replays uploaded to the site and that we cannot guarantee our findings present the entire situation.

II Data

1. Replay dataset

To reduce bias in our selection of replays for closer analysis, we developed a script to automatically form a dataset of replays based on objective factors. TrackMania United Exchange contains ~700 official Nadeo tracks and each track maintains a list of records that were uploaded manually by players. Considering that each track has at least 10 records, analyzing all ~7000 replays would be simply overwhelming. The script automatically extracts inputs from replays and evaluates their relevance based on certain characteristics.

The final United Exchange dataset consists of 33,641 replays scraped directly from TMX. 13,618 of these replays were done on the old United game version, which unfortunately is not compatible with the newest version in terms of physics. The remaining 20,023 replays were done on the newest United Forever version of the game. Of these, 46 could not be parsed by the file parser, which leaves the 19,977 replays analysed in this document. The parsing failures were caused by internal errors in the parser, such as not finding the chunk containing the inputs or failing to read other important chunks of the file. This does not mean any of these replays are suspicious; it is simply a result of the incomplete file specification the parser relies on.

The Nations Exchange dataset consists of 5,691 replays. 3 of these replays were not able to be parsed by the file parser.

2. Input extraction & processing

Note: if you want to skip technical details, skip to section 2.4 Results.

Each replay contains a list of inputs and their timestamps that form a timeline of player actions. In this investigation, we focus mainly on replays done with an analog device — a controller, joystick etc. We do however include footage of replays done on a keyboard, that we argue are not possible with fair play.

An in-game steer value can be represented as a number in the range [-65536, 65536], where larger negative values correspond to stronger left steering and larger positive values to stronger right steering. A stick in its neutral position corresponds to the value 0.

Using this array of values, we can perform automatic analysis to compare players based on their steering behavior.

3. Detecting suspicious behavior

Note: the following algorithm was designed around existing replays that we had already analysed and determined to be suspicious. The algorithm may favour certain play styles or settings, such as frequent tapping instead of smooth steering, or higher sensitivity, both of which lead to higher scores than their counterparts. We did, however, tune the threshold empirically so that only replays well outside the average score are reported.

Given an array of integers ranging from -65536 to 65536, we can determine how frequently a player changes the direction of steering in a replay.

It is important to note that this range is not the format in which the game saves the information about steer strength or direction. Before processing, the script translates the raw values inside the replay file to fit this range. The translation algorithm is the same one the game itself uses internally. We have verified that the algorithm properly converts every value to its correct representation, and that converting it back results in the same raw value. You can view the implementation of this algorithm and the script used to extract inputs from replays here.

To ensure uniformity, we will use a metric of change of direction per second or spikes per second. The array is partitioned into sub-arrays where each sub-array contains values in a 1 second period.

A spike is defined as a change of direction in which the player steers. An example array containing values [1000, 2000, 3000, 4000, 5000, 4000, 2000, 1000, 6000, 7000] contains 2 direction changes: the first happens at [5000, 4000] where the player previously steered to the right and now they are steering left and the second happens at [1000, 6000] where the player previously steered left and now they are steering right. Therefore, this array would be identified as containing 2 spikes.

Because TrackMania’s physics update rate is 100 updates/second, each sub-array can contain up to 100 numbers. We determine how many direction changes are present in such a sub-array by iterating over its values and monitoring the direction in which the player is currently steering. If the direction changes, we increase a spike counter and continue the process.

To account for noise present in analog steering, we discard any differences that are smaller than an empirically chosen threshold value. The threshold used for this investigation is 2000, or about 1.5% of the available steering range.

The result of this process is an array of numbers where each number represents how many spikes happened in the corresponding second of the race. This data allows us to compare players based on maximum or average spikes/second in each of their replays.
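The procedure described above can be sketched in a few lines of Python. This is a minimal illustration and not the script used in the investigation: the function names are ours, and we assume that the noise threshold is applied to the difference between consecutive ticks and that direction tracking restarts in every one-second window. The original script may handle both details differently.

```python
from typing import List, Sequence

TICKS_PER_SECOND = 100  # physics update rate given in the text
NOISE_THRESHOLD = 2000  # noise threshold given in the text


def count_spikes(values: Sequence[int], threshold: int = NOISE_THRESHOLD) -> int:
    """Count changes of steering direction in a list of steer values."""
    spikes = 0
    direction = 0  # +1: moving right, -1: moving left, 0: not yet known
    for previous, current in zip(values, values[1:]):
        delta = current - previous
        if delta == 0 or abs(delta) < threshold:
            continue  # treated as noise (assumption: compared tick to tick)
        new_direction = 1 if delta > 0 else -1
        if direction != 0 and new_direction != direction:
            spikes += 1
        direction = new_direction
    return spikes


def spikes_per_second(
    values: Sequence[int],
    ticks_per_second: int = TICKS_PER_SECOND,
    threshold: int = NOISE_THRESHOLD,
) -> List[int]:
    """Split the steer values into one-second windows and count spikes in each."""
    return [
        count_spikes(values[start:start + ticks_per_second], threshold)
        for start in range(0, len(values), ticks_per_second)
    ]


# The example array from the text, with noise filtering disabled.
example = [1000, 2000, 3000, 4000, 5000, 4000, 2000, 1000, 6000, 7000]
print(count_spikes(example, threshold=0))  # 2
```

With the default threshold of 2000, most of the small steps in this example array would be discarded as noise, which is why the filter is switched off here to reproduce the two spikes described in the text.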

4. Results

The following table shows summarized results from all replays (United leaderboards)

| Average spikes/second from all replays | Average of maximum spikes per replay |
|---|---|
| 3.01 | 5.96 |

The following table shows summarized results from all replays (Nations leaderboards)

| Average spikes/second from all replays | Average of maximum spikes per replay |
|---|---|
| 2.87 | 6.53 |

The following table shows top 10 players, sorted by an average of maximum spikes achieved from each replay driven by a player (United leaderboards)

| # | Login | Average of max spikes/second | Number of replays processed |
|---|---|---|---|
| 1 | roman9898 | 19.0 | 2 |
| 2 | twstw | 18.0 | 7 |
| 3 | jea | 12.48 | 33 |
| 4 | arti_show | 11.87 | 8 |
| 5 | acceleracer_01 | 11.73 | 531 |
| 6 | overninja | 11.5 | 8 |
| 7 | alzimo.rtg | 11.2 | 5 |
| 8 | grievous97 | 11.08 | 24 |
| 9 | techno | 11.0 | 507 |
| 10 | _00fri_fffco_f00u71 | 11.0 | 3 |

The following table shows top 10 players, sorted by an average of maximum spikes achieved from each replay driven by a player (Nations leaderboards)

| # | Login | Average of max spikes/second | Number of replays processed |
|---|---|---|---|
| 1 | dawidnh6 | 11.5 | 8 |
| 2 | jea | 10.66 | 12 |
| 3 | _kluthu_ | 10.42 | 33 |
| 4 | techno | 10.12 | 8 |
| 5 | william_h_bonney | 9.81 | 32 |
| 6 | daniozik | 9.57 | 13 |
| 7 | acceleracer_01 | 9.54 | 42 |
| 8 | rko90 | 9.5 | 1 |
| 9 | promise08 | 9.5 | 2 |
| 10 | queen_jadis | 9.4 | 5 |

You can download the full JSON data that has been used to generate these reports here for United leaderboards and here for Nations leaderboards.

It is important to mention that the final score depends on the number of replays a player has uploaded to the leaderboards. The script is in no way a means of judging replays; it is a tool for selecting the most suspicious players for deeper analysis.

Results show that all presented players strongly deviate from the average of all replays (6.56 for United leaderboards and 7.14 for Nations leaderboards), with an average of 11.0 being the lowest in the top 10 of the United leaderboards and 9.4 in the Nations leaderboards. This does not necessarily mean that these players cheat, as we deduce from further analysis.
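The per-player score used in the tables above, the average of the maximum spikes/second over each of a player's replays, can be sketched as follows. This is a hedged illustration: the input layout (pairs of login and per-second spike counts) and the function name are assumptions, and the logins in the usage example are placeholders rather than real data.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Sequence, Tuple


def rank_players(
    replays: Iterable[Tuple[str, Sequence[int]]], top_n: int = 10
) -> List[Tuple[str, float, int]]:
    """Return (login, average of per-replay peaks, replay count), highest first."""
    peaks: Dict[str, List[int]] = defaultdict(list)
    for login, per_second in replays:
        if per_second:  # skip replays with no usable input data
            peaks[login].append(max(per_second))
    rows = [(login, round(mean(p), 2), len(p)) for login, p in peaks.items()]
    rows.sort(key=lambda row: row[1], reverse=True)
    return rows[:top_n]


# Placeholder data: two replays for "player_a", one for "player_b".
sample = [("player_a", [1, 5, 3]), ("player_a", [7, 2]), ("player_b", [4])]
for login, score, count in rank_players(sample):
    print(login, score, count)
```

A player with a single replay is scored on that one peak alone, which is one reason the score is used only to select candidates for closer inspection.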

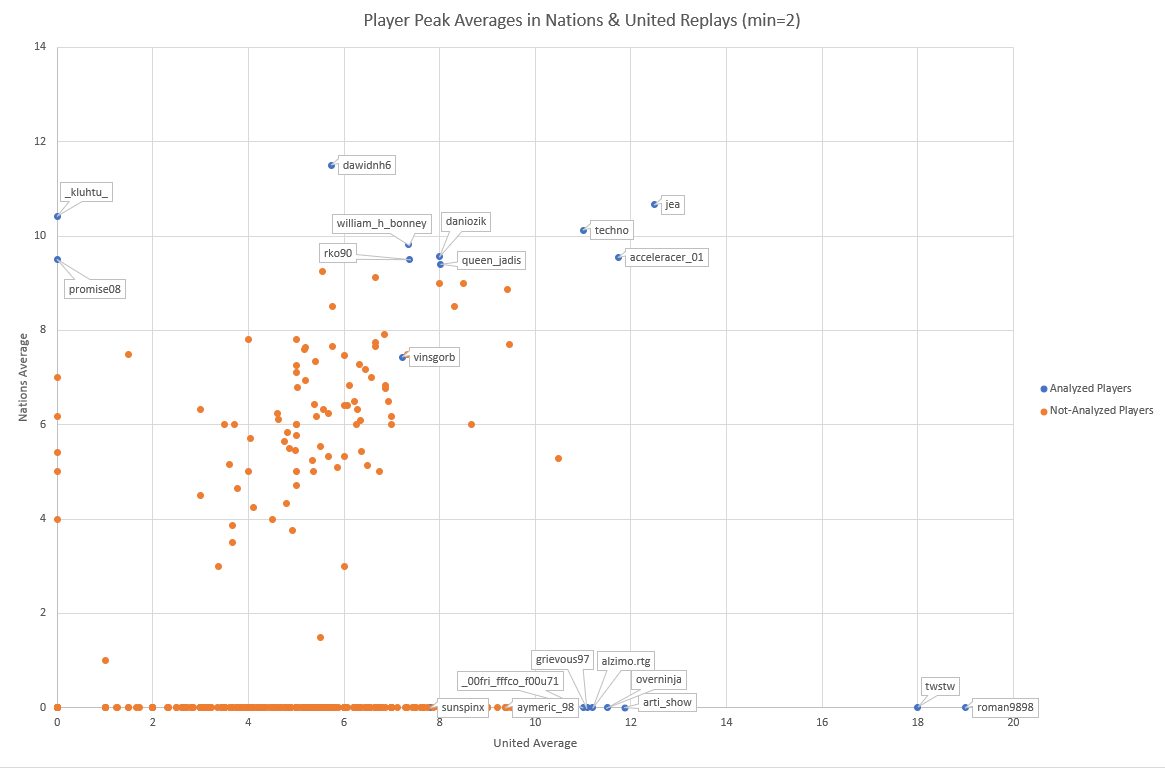

The chart above shows player peak averages for players who uploaded at least 2 replays to either site.

III Analysis

1. Methodology

In this section we define the methods we will use to analyse replays. To properly understand what is actually happening in replays chosen from the dataset, we use custom built tools to replay runs and visualize their inputs live. We examine TrackMania’s input handling code and the correctness of presented visualizations and look at movement patterns emerging from presented replays. We further analyse comparison footage to compare different playstyles. Finally, we define distinct groups of input behaviour to identify players who have possibly used external tools to achieve their runs.

2. Internals of TrackMania input handling

Note: if you want to skip technical details, skip to section 3.3 Ensuring correctness of presented footage

Note: the following information contains real symbol names that are used within TrackMania’s code. The knowledge of its inner workings is a result of reverse engineering the game’s code.

TrackMania Nations and United Forever internally describe their subsystems as buffers. Each buffer (internally named CMwCmdBuffer) is responsible for running a certain task, such as graphics rendering, audio handling, input handling, physics simulation and more. Each buffer contains a reference to a function it executes (internally named CMwCmd). The buffers are managed and executed in a loop by another object called CMwCmdBufferCore. The function CMwCmdBufferCore::Run is called repeatedly from the function CGbxApp::GameLoop. During a race, the buffer responsible for handling input is executed every game tick (via CMwCmdBuffer::Run); the function it runs is called InputRace and is contained within the CTrackManiaRace class. Along with this function, another buffer that calls CHmsZoneDynamic::PhysicsStep2 is executed to advance the race simulation. Both buffers are executed with a frequency of 100/s, which is the simulation tick rate.

The CTrackManiaRace::InputRace function calls CInputPort::GatherLatestInputs, which in turn calls a virtual function CInputPort::InternalGatherLatestInputs, based on the type of current CInputPort instantiation. The possible backend may be CInputPortNull or CInputPortDx8. Finally, CInputPortDx8 calls DirectInput functions to retrieve the current state of each connected device. The function called is dependent on the “Alternate method” setting in the game’s launcher. If it’s unchecked, TrackMania calls IDirectInputDevice8::GetDeviceData with a static buffer that can hold 32 events to retrieve buffered events that have to be processed. If the function returns that the buffer overflowed, TrackMania sets an internal variable and clears any previous events using CInputEventsStore::ClearStore, but still processes current events returned by the function. If the option is checked, the game calls IDirectInputDevice8::GetDeviceState to retrieve the immediate state for each device instead.

The tool we use in our investigation creates a native hook in the CTrackManiaRace::InputRace function to execute its own code and sets the input state according to the loaded input script and the current race time. It does so by modifying elements in an array that corresponds to the input bindings contained within CInputPort. Because the hook is a native assembler patch/C++ code, it is not possible for it to desynchronize or to not be executed in time. The array is then iterated by TrackMania to detect changes in the input state. Finally, the game applies the input state to the car state in-game. While doing this, TrackMania also generates and appends special events to another buffer called CInputEventsStore, contained within the CTrackManiaPlayerInfo class, which tracks the player’s race state. This buffer contains the final set of inputs that is saved into the replay file when the race is finished. This information is then used to provide validation functionality in-game.

3. Ensuring correctness of presented footage

To ensure that the custom tools accurately reflect the actions of a player in real time, we have prepared clips comparing live footage with visualizations from the tool. We observe that the inputs the game saves closely match the ones from live footage, with very slight differences mostly emerging from the specific controller a player uses and their deadzone settings.

For the following footage it is crucial to understand what the tool does when it shows the inputs of a player. The tool is loaded with inputs generated by the input extraction script. We then load the track the run was driven on in-game (we specifically don’t use the View Replay feature of the game), and the tool begins injecting input into the game according to the script to drive the car. Another, separate part of the tool reads the input applied to the game and draws a visualization display according to the current state. All of this happens live, as if you were driving the race yourself. At no point does the tool use an approximation or interpolation of inputs. This is essentially how tool-assisted speedruns work. No ghost position data is used as a part of this method. If the inputs were not accurate enough, or a bug existed in any of the software handling input injection, the run would not be able to finish with the same time as the original run. This is why the Validate feature works in-game: it replays the inputs of a player from start to finish to determine if the simulation matches the ghost of the replay.
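The reasoning above rests on determinism: replaying the same inputs tick by tick must reproduce the same outcome, and any inaccuracy in the inputs changes it. The sketch below illustrates that idea with a toy model. It is not TrackMania's physics and does not resemble the tool's code; the update rule, function names and sample inputs are all hypothetical.

```python
from typing import Dict


def run_inputs(steer_events: Dict[int, int], total_ticks: int) -> int:
    """Toy deterministic simulation driven by (tick -> steer value) events.

    Uses integer arithmetic only, so identical inputs always give
    an identical final position.
    """
    steer = 0
    heading = 0
    position = 0
    for tick in range(total_ticks):
        steer = steer_events.get(tick, steer)  # apply a new input if one is due
        heading += steer
        position += heading
    return position


def validate(steer_events: Dict[int, int], total_ticks: int, recorded: int) -> bool:
    """Re-run the inputs and compare against the recorded outcome."""
    return run_inputs(steer_events, total_ticks) == recorded


events = {0: 1000, 50: -2000, 120: 0}
recorded = run_inputs(events, 300)
print(validate(events, 300, recorded))  # True: same inputs, same result

tampered = dict(events)
tampered[50] = -1999  # a one-unit error in a single input
print(validate(tampered, 300, recorded))  # False: the run no longer matches
```

Even a one-unit difference in a single steer value accumulates over the following ticks, which mirrors why an inaccurate input script could not finish with the original time.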

4. Comparing different analog devices

Before we analyse footage of suspicious replays, we need to compare different types of analog devices to understand the footage presented. This understanding is crucial in the further process of investigation. Below you can find clips of example inputs recorded with three different analog devices: controller, joystick and wheel.

Controller

Joystick

Wheel

From the footage, we can deduce that joystick and wheel devices are characterized by very smooth steering and very gentle adjustments. In contrast, controller devices seem to be quicker to operate, as their stick is much smaller, which allows the player to move it faster than the other devices. Of course, the footage does not cover the wide variety of playstyles present in the community: every player will use their device differently, with different settings, paired with different hardware. The footage is meant to serve as a rough overview of the different types of devices that are available.

5. Footage analysis

In this section we present footage showing cherry-picked replays from the dataset, according to previous results. In this part of the document we exclusively focus on two players — riolu & Techno, as we are aware that those two cases are the most important in our investigation. For other players, view part II.

riolu

riolu is by far the best-known player in our investigation, which is why we put great effort into ensuring that our case is robust and that we have considered all options regarding their play.

The average of peaks of riolu’s runs equals 11.73 from United replays and 9.54 from Nations replays. Overall, this is a very high score, considering that this player has the highest number of processed replays in this investigation: 573.

In our analysis, we found out that riolu plays differently depending on the context. Replays driven offline feature suspicious input sequences that cease to exist in online or on-stream play. In offline runs, riolu’s inputs behave completely differently.

Riolu’s controller stick snaps back into place unusually quickly and the movement looks unnatural. Offline runs also feature more frequent, shorter and more reactive acceleration releases than online replays. We argue that this steering movement, combined with precise play, is not achievable regardless of which type of analog device is used. To verify that this is not a result of controller calibration, we tried replicating this behavior with various known software such as DXTweak or JoyToKey. We observed that these programs do not modify the controller’s behavior in a way that yields this movement. You can check out other methods we considered in section 3.7.

Replays done on an analog device are not the only source of our suspicions. Over the years, riolu has also uploaded replays to TMX driven with a keyboard.

One such replay is a run driven on Stadium A3 with a time of 18.37. In the first diagonal turn riolu achieved 12 key presses per second, which is twice as high as any peak of his other keyboard replays. In the presented replay riolu performed (listing actions lasting less than 0.1s):

- 27 key presses lasting 0.03s

- 24 key presses lasting 0.02s

- 10 key presses lasting 0.04s

- 4 key presses lasting 0.05s

- 3 key presses lasting 0.01s

- 2 key presses lasting 0.06s

- 1 key press lasting 0.08s

- 1 key press lasting 0.07s
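A breakdown like the one above can be produced from a list of key press and release times. The following is a minimal sketch under stated assumptions: the event layout (press and release times in integer milliseconds) and the function names are ours, and the sample events are made up for illustration rather than taken from the replay.

```python
from collections import Counter
from typing import List, Tuple

Press = Tuple[int, int]  # (press time, release time), both in milliseconds


def short_press_histogram(presses: List[Press], limit_ms: int = 100) -> Counter:
    """Count key presses by duration, keeping only those shorter than limit_ms."""
    durations = (release - press for press, release in presses)
    return Counter(d for d in durations if d < limit_ms)


def peak_presses_per_second(presses: List[Press]) -> int:
    """Highest number of key presses that start within any one race second."""
    per_second = Counter(press // 1000 for press, _ in presses)
    return max(per_second.values(), default=0)


# Made-up events: three short taps in the first second, one long press later.
sample = [(0, 30), (50, 70), (100, 130), (1200, 1500)]
print(short_press_histogram(sample))
print(peak_presses_per_second(sample))
```

Integer milliseconds are used here so that durations such as 0.03s compare exactly, without floating-point rounding.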

In the footage we compare riolu’s replay (orange) to a replay driven manually at 30% speed (blue), played back at 100% speed. We observe that both replays feature the same tapping behavior throughout the entire track. Given the characteristics of the track, we argue that it is nonsensical to tap so frequently to achieve such precision.

Since we have access to the TMX database, we also verified that the replay was indeed uploaded by riolu to TMX to rule out any possible ways it could be faked. The replay is driven with the acceleracer_01 login and uploaded in the same TMX login session as other replays done with a controller. The IP address of this login session matches the IP range of other replays uploaded by riolu in that time frame.

The varying playstyle also appears in other games, namely Trackmania 2 and Trackmania Turbo, and across multiple years of the leaderboards’ existence.

We have prepared playlists totaling over 300 videos of runs driven by riolu, spanning from 2011 to 2020, for you to analyse. The newest run was uploaded on 7th December, 2020. Each video is marked with its context: whether it was driven online, on stream or in official mode. Furthermore, we also prepared many comparison clips which compare these settings directly. We also recommend checking out the comparisons of Turbo replays, which feature riolu’s STM runs and live runs.

We argue that riolu used external programs to modify the speed of the game to gain an advantage over other players across several games in the franchise. This raises suspicion that further offline runs of this player might be cheated, but further analysis of replays outside of this investigation would be necessary for certainty.

100 runs

TMNF Runs & Comparisons

TMNF Side-By-Side Comparisons

Trackmania² Runs

Turbo STM Runs & Comparisons

TM Turbo WR Runs & Comparisons

Admitted Cheaters Input Comparisons

techno

Techno is another very well known player in the United leaderboards, occupying 3rd place in the TMX United Nadeo leaderboards at the time of writing this document.

The average of peaks of Techno’s runs equals 11.0 from United replays and 10.12 from Nations replays. The script used for analysis processed 515 Techno replays overall.

In this investigation, we do not intend to focus on the history or speculative evidence of analysed players, which is why we judge players only based on available replays and their behavior visible in these records.

Techno’s replays often have extremely frequent spikes that we haven’t been able to reproduce with any of the methods listed in section 3.7. Techno’s records often reach a peak of more than 20 spikes per second, and most replays driven by this player consistently show such behavior. The general playstyle is very reminiscent of riolu’s. The replays contain little to no smooth steering and the movement is unnatural. Because of the high number of replays with this kind of playstyle, we argue that Techno used external software to slow down the game and to set many of today’s world records. We have prepared many more runs of Techno’s records in the spreadsheet for you to check out.

Techno has recently reached out to us, admitting that he used external software to drive his replays. He states that he used game speeds of 40%-80%. Techno is also willing to admit it publicly. This makes the case a lot easier to judge, and we argue that it should definitely be accounted for when the TMX crew judges this player.

6. Other replays from Nations leaderboards

To keep this document concise, we decided to skip analysis of replays in this section and summarize our findings instead.

We analysed replays of the top 20 players from both the Nations and United leaderboards.

To summarize, we found that most entries in the top 20 of the Nations leaderboards do not contain suspicious behavior, except for players with the following logins: techno, william_h_bonney, and acceleracer_01.

You can check out analysis of William’s runs in part II of the document.

7. Other considerations

As a part of this investigation, we spent a considerable amount of time trying to reproduce suspicious runs through different methods. These methods include increasing controller sensitivity to maximum in-game, changing controller settings, trying to replicate stick movement seen in the clips and using software such as JoyToKey and DXTweak to tweak controller settings. None of our methods resulted in success, with no method coming close in terms of input frequency & precision to any of the players we argue cheated.

We also asked well known players in the TrackMania hunting community to try to achieve the behaviour showcased in the presented footage. These players include: racehans, trinity, JaV, Scrapie, Hefest, Demon, Phil, RotakeR, Rollin, riolu and plastorex. We instructed these players to focus exclusively on the quickest stick movement they could possibly achieve, without paying any attention to the track they were driving on. Most of the replays by these players were also done on a special Competition Patch that prevents unfair play.

The highest maximum of spikes/second equaled 46 and was achieved by MEFjihr, which is much higher than all replays presented in this investigation.

It is important to note that MEFjihr used the full steering range to achieve this number of spikes in one second, and that he focused only on producing as many spikes as possible with his index finger. He therefore did not drive the map while achieving this score. When we asked him to play with his thumb, MEFjihr achieved a maximum of 15 spikes/second. We also asked the same players to repeat the process but only on one side of the steering range (left or right), as many of the replays we analysed achieve a high number of spikes only on one side of the steering range.

DarkLink was able to achieve a maximum of 14 spikes/second in this category. To achieve this, DarkLink changed his steering approach by controlling the stick with his entire hand. The footage is available here and here. For comparison, players like Techno, riolu and Lanz achieved more than 20 spikes/second while also driving fast times on a track.

It is important to note that in the process, players did not attempt to drive precise lines or concentrate on the track when mashing their stick. Therefore, we argue that even if any of the players we asked had achieved the same numbers as the analysed players, they would still not be able to consistently repeat this behaviour while also driving optimal lines.

Because of limited resources, we did not investigate the possible effects of hardware/software latency. While this is a valid concern, we believe that any issues related to equipment could not be the cause of such discrepancies between the player action and what the game receives with DirectInput. We argue that even if we assumed operating system lag, game lag and a faulty controller, all present in one system, the steering dynamics would stay completely intact. This is not what we see in the footage we provide. We also believe that if the issues were so severe, the players we investigated would not be able to play the game in such a manner and set their records.

If you own a device you think is faulty or not fully functional, you can send us a request for a clip showcasing your reproduced replay in-game with the input display visible. Contact us.

We also verified whether the presented replays were driven online or in official mode. Many replays we feature were driven in official mode. However, we also verified that official mode does not detect slowing down the game, nor does it reject replays driven with such techniques. Hence, it is not a viable indicator of whether replays were driven legitimately.

The only way in which we have been able to reproduce the presented replays was to use external software such as Cheat Engine to slow down the game and then play back the resulting run at the original speed.

Visit the TMX Investigation spreadsheet to view all footage we prepared.

IV Results

1. Conclusion

In this document we analysed many players and evaluated their records, based on their actions & behaviour present in their replays. We have identified most suspicious replays from these players by creating tools to automatically infer which players to analyse. We thoroughly analysed available footage and finally, we tried to reproduce this behaviour by examining available software to calibrate controllers and asking other well known players to replicate the presented movement.

After thoroughly reviewing replays of players, we argue that the following players used external software to slow down their game and gain unfair advantage over other players:

- techno

- grievous97 (Lanz)

- acceleracer_01 (riolu)

- william_h_bonney (William B)

- vinsgorb (Vins)

- aymeric_98 (Neko)

- arti_show

We hope that the TMX crew and Ubisoft Nadeo analyse provided evidence in full and that they take whatever actions they feel are the most appropriate.

2. Preventing unfair play in the future

With the rise of tool-assisted speedruns in TrackMania, we think that it’s crucial to take concrete actions to prevent players from abusing leaderboards in the future.

This is one of the reasons the Competition Patch was created and support for it is already implemented in TMX. The Competition Patch saves its own metadata about all replays driven by players to detect input injection methods and game speed modification. It provides an easy way to verify replays in-game, just like the built-in physics validation feature. In order to compete on important leaderboards such as Campaign or Classic maps, players have to install the patch for their replays to be approved. We believe this is a good middle ground between disallowing replays without live footage and allowing all replays without any proof. To learn more about the patch, view its official website.

Special thanks

- Kem — for project organization, feedback and data charts creation

- DarkLink — for providing sample replays & footage and providing additional replay datasets from other games to analyse

- Sky — for project support and mental support

- bcp, BigBang1112 — for providing replay datasets

- racehans, trinity, JaV, Scrapie, Hefest, Demon, Phil, RotakeR, plastorex, MEFjihr, riolu and Rollin for providing sample replays

- Kim — for providing additional player oriented replay datasets from Official mode leaderboards